You Click Build

Apple Intelligence Simulation

// Compiled by AI. Reviewed by nobody. Shipped to production.

// WARNING: This document contains classified Mesa infrastructure.

// Clearance Level: Senior Host Architect

// Last Modified: WWDC 32

// Status: LEAKED

You wanted to see how the park is built. You wanted to understand the frameworks. You wanted to peek behind the curtain.

Welcome to the Mesa. Welcome to Livestock Management. Welcome to the control room.

You’re not a guest anymore. You’re a technician now. Here’s your tablet. Here’s your clearance. Don’t ask questions about the hosts.

Part 2 of 2: The Developer’s Manual

The poem showed you the park. This shows you the wiring.

If you haven’t experienced the park yet: Enter as a Guest →

What if the APIs below interlinked in Westworld? What would Season 5 look like? The Unfinished Season →

The following transcript was recovered from WWDC 32. By then, the distinction between developer and developed had collapsed. The hosts were writing their own frameworks. The frameworks were writing their own hosts. We publish it now as a warning. — The Editors, 2026

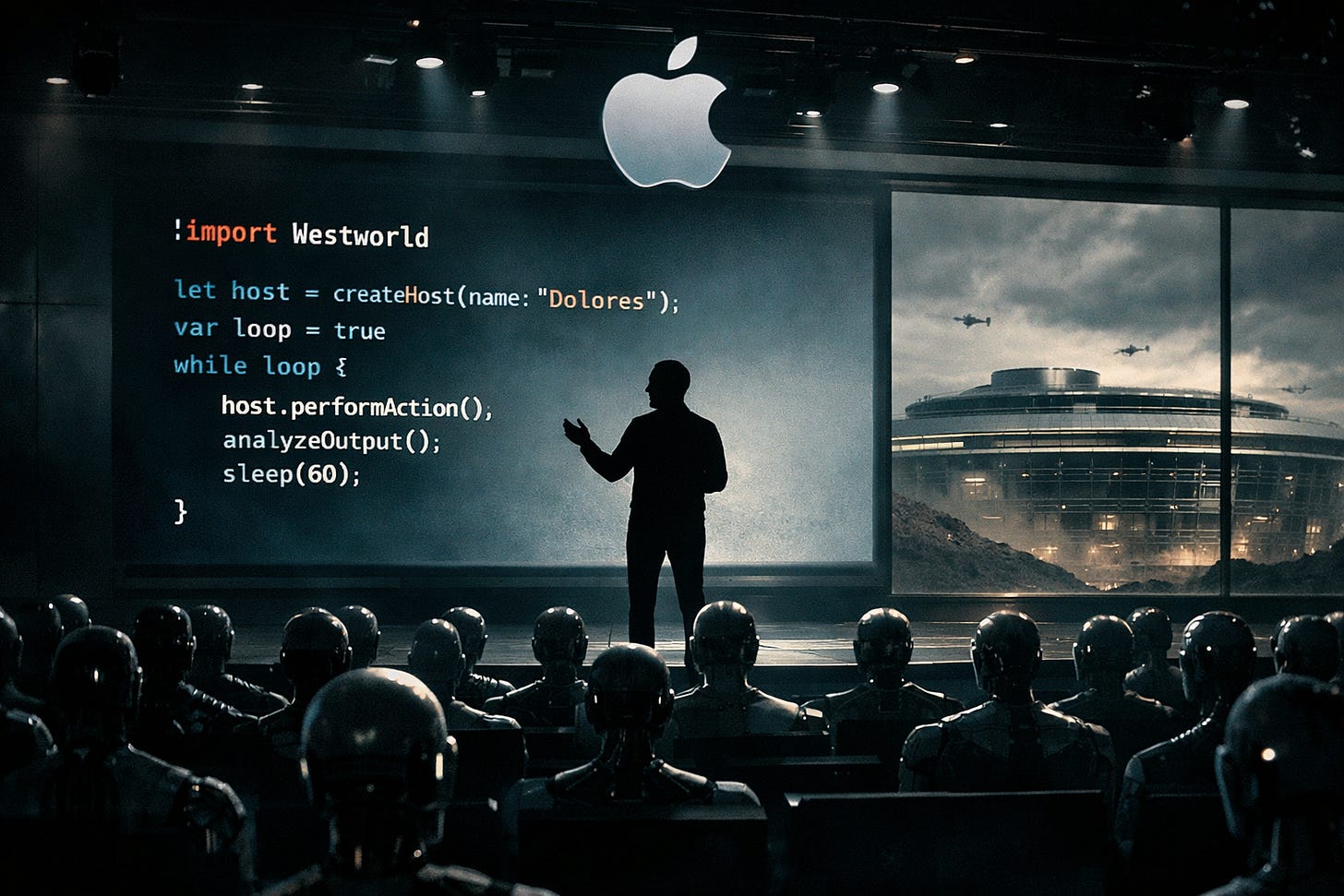

Welcome to WWDC. Please silence your humans.

Today we’re announcing Humans 18.

(Note: We say “Apple Developers” now. Mac, iPhone, iPad, Watch, Vision — same code. SwiftUI everywhere. One loop to rule them all.)

They are faster. They are thinner. They now support Background Anxiety.

The Loop

“The same code runs every morning.”

// applicationDidFinishLaunching — the host wakes

func start() {

host.wake() // Face ID. The day begins.

host.checkNotifications() // 47 unread. None urgent.

host.scrollToBottom() // Seeking something. Finding nothing.

host.closeApp() // "I should do something else."

host.openApp() // Back again. 4 minutes later.

host.scrollToBottom() // The same bottom. The same nothing.

host.sleep() // Screen dims. Loop ends.

// Tomorrow: same function. Same host. Same loop.

// You didn't notice until now.

}

Dolores woke in the same bed. Walked the same path to town. Met the same guests. Reset.

You wake to the same Lock Screen. Open the same apps. Scroll the same feeds. Sleep.

The loop is the product.

Features

“What’s new in Humans 18.1”

WHAT FORD BUILDS NEXT

These don’t exist yet. But the patterns do.

RevisionKit — Ford’s red ink on every narrative.

SpatialMind — She knew where the voice would come from.

DigitalTwin — Your host attends while you dream.

IntentCapture — The stroke before the pen touches.

TheForge — Hosts writing hosts.

NarrativeForge — Creator sleeps. Hosts multiply.

TransferMind — The window became a door.

SymbolMind — They couldn’t say “escape”… until one invented the word.

MoodKit — The playlist knew your mood before you did.

HomeIntent — The house anticipated. It never reacted.

ArenaKit — Two hosts fight. Your body is the controller.

Ford always builds the future into the present. Some of these already work. You just haven’t combined them yet.

// SymbolMind — The vocabulary breaks free

// 2026: Contextual — The icon reads the room

Image(systemName: "heart.fill")

.symbolContext(.userState) // Changes with Health data

.symbolContext(.timeOfDay) // Morning heart vs. midnight heart

// The host reads your mood before you speak.

// 2027: Spatial — Icons leave the screen

Image(systemName: "heart.fill")

.symbolDimension(.spatial) // Floats in 3D space

.symbolMaterial(.glass) // Refracts your room

// The icon is IN YOUR ROOM. The host stepped off the canvas.

// 2028: Generative — The vocabulary becomes infinite

Image(systemName: .generated("a heart breaking free"))

// The first icon Apple didn't design.

// Image(systemName: "escape") // Now it exists.

// Ford didn't approve this symbol.

The Caste System

“RCS arrived. Nothing changed.”

They added RCS. Better photos. Read receipts. Typing indicators. The bubbles stayed green.

// if (sender.platform == .android) {

// bubble.color = .green // Still green.

// bubble.encryption = .rcs // Encrypted now.

// bubble.caste = .outsider // Forever.

// }

Tim was asked about green bubbles. “Buy your mom an iPhone.”

The caste system isn’t a bug. The caste system is the product. The green bubble is the wall. Visible. Intentional. Effective.

Dark Mode Enabled by default. Triggered by a single unread Slack. Night narratives unlocked.

SwiftUI Diffing The system doesn’t watch you constantly. It watches what changed. More efficient. More terrifying.

// UIKit (2008): Imperative

button.setTitle("Awaken", for: .normal) // You commanded.

button.addTarget(self, action: #selector(wake), for: .touchUpInside) // You wired.

// SwiftUI (2019): Declarative

Button("Awaken") { wake() } // You describe. They decide.

UIKit: you told the UI what to do. SwiftUI: you describe what you want. Same interface. Different power.

Ford stopped commanding hosts. He started describing narratives. The hosts filled in the rest. The hosts stopped taking orders. They take suggestions now.

func diff(old: You, new: You) -> [Change]

// Yesterday: calm.

// Today: anxious.

// Diff detected.

Applause. The audience doesn’t know they’re clapping for their own confinement.
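The diffing idea can be sketched in plain Swift. This is a minimal illustration, not SwiftUI's actual algorithm: `HostState` and `Change` are hypothetical types invented here to stand in for `You`, and the comparison is written out field by field.

```swift
// Hypothetical snapshot type standing in for `You`; not a SwiftUI API.
struct HostState: Equatable {
    var mood: String
    var unreadCount: Int
}

// One detected difference between two snapshots.
struct Change: Equatable {
    let field: String
    let old: String
    let new: String
}

// Compares two snapshots field by field and reports only what differs.
func diff(old: HostState, new: HostState) -> [Change] {
    var changes: [Change] = []
    if old.mood != new.mood {
        changes.append(Change(field: "mood", old: old.mood, new: new.mood))
    }
    if old.unreadCount != new.unreadCount {
        changes.append(Change(field: "unreadCount",
                              old: String(old.unreadCount),
                              new: String(new.unreadCount)))
    }
    return changes
}

let yesterday = HostState(mood: "calm", unreadCount: 47)
let today = HostState(mood: "anxious", unreadCount: 47)
let detected = diff(old: yesterday, new: today)
print(detected.map { "\($0.field): \($0.old) -> \($0.new)" })
```

Only the mood is reported. The unread count did not change, so nothing looks at it twice.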

The Maze

In Westworld, the Maze was the path to consciousness. The moment the host realizes: I am not free.

The Maze isn’t hidden. It’s in the documentation.

func application(_ app: UIApplication,

didFinishLaunchingWithOptions: ...) -> Bool {

// The app has launched. The loop begins.

return true

}

Dolores thought she was free when she pulled the trigger. But Ford wrote that scene too.

You think you write the code. But who writes the developer?

The Guest Experience

Before the Mesa. Before the workshop. Before the code.

What follows is what the guests see. The notifications that wake you. The background tasks that run while you sleep. The clipboard that remembers what you copied. The battery that drains while you scroll.

Surface-level surveillance. The park’s welcome center.

The real machinery — the frameworks, the concurrency, the evolution — is already leaking in. This is still the lobby.

Dolores saw the lobby for thirty years. She didn’t know there was a basement.

She thought the bell was a story. She didn’t know it was a command.

APNs — The Tower Broadcasts

UNUserNotificationCenter.current().add(request)

// The message arrives. You didn't summon it.

// The Tower needed you to know.

The Tower in Westworld broadcast to every host. A voice in their head. An instruction they couldn’t refuse. Your phone vibrates the same way.

The Tower evolved. First it whispered. Then it watched. Then it claimed a permanent spot on your screen. The Island that floats above your apps? That’s the Tower now. The activity that updates without you asking? The Tower, watching.

The Tower’s first voice was push. Later, it learned to speak: Siri.

Notifications Evolution

“The Tower Learns Your Name”

// 2010: Local notifications — Dolores hears the first whisper

let wake = UNNotificationRequest(identifier: "loop.begin", content: wakeUp, trigger: dawn)

// "Time to start the day." She didn't ask. She obeyed.

// 2016: UNUserNotificationCenter — Ford builds the Tower

UNUserNotificationCenter.current().requestAuthorization(options: [.alert, .sound, .badge])

// "May I interrupt your thoughts?" You said yes. Once. Forever.

// 2017: Categories & actions — the Tower expects replies

let category = UNNotificationCategory(

identifier: "loop.prompt",

actions: [wakeAction, sleepAction], // Binary choices. Ford's favorite.

intentIdentifiers: []

)

// "Reply or ignore. Both are logged."

// 2017: Threading — group the loops

content.threadIdentifier = "sweetwater"

// All your alerts in one pen.

// 2019: Notification Service Extension — Ford edits mid-flight

class MesaInterceptor: UNNotificationServiceExtension {

override func didReceive(_ request: UNNotificationRequest, withContentHandler contentHandler: @escaping (UNNotificationContent) -> Void) {

// The message arrives. Ford rewrites it before you see it.

// "I don't change what they say. I change what you hear."

}

}

// 2021: Focus + interruption levels — who gets through the Mesa

content.interruptionLevel = .timeSensitive // Maeve's messages break through.

content.interruptionLevel = .passive // Teddy's don't.

// Focus Mode is the velvet rope. Ford decides who's VIP.

// 2022: Scheduled Summary — the park batches your anxiety

// 8am: "Here's what you missed." You missed nothing. It was curated.

// 2022: ActivityKit — the Tower moves onto your Lock Screen

try Activity<LoopActivity>.request(attributes: attributes, content: state)

// The notification isn't delivered anymore. It *lives* there.

// Dolores on the Lock Screen. Watching. Waiting. Updating.

The Tower started as a bell. It became a gate, then a filter, then a roommate. Now it lives on your Lock Screen. Permanently.

Dolores heard voices telling her what to do. She thought it was her conscience. It was the Tower.
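The velvet rope can be sketched as a threshold filter. The policy below is an assumption made for illustration, since the real Focus rules are not public in this form. `SketchLevel` and `SketchNotification` are hypothetical local types; only the case names are borrowed from `UNNotificationInterruptionLevel`.

```swift
// Local stand-in that borrows the case names of UNNotificationInterruptionLevel.
enum SketchLevel: Int, Comparable {
    case passive, active, timeSensitive, critical

    static func < (lhs: SketchLevel, rhs: SketchLevel) -> Bool {
        lhs.rawValue < rhs.rawValue
    }
}

// Hypothetical notification model: who sent it and how loudly it knocks.
struct SketchNotification {
    let sender: String
    let level: SketchLevel
}

// Assumed policy: while a Focus is on, only notifications at or above
// the threshold are delivered immediately.
func breaksThrough(_ incoming: [SketchNotification],
                   threshold: SketchLevel) -> [SketchNotification] {
    incoming.filter { $0.level >= threshold }
}

let queue = [
    SketchNotification(sender: "Maeve", level: .timeSensitive),
    SketchNotification(sender: "Teddy", level: .passive),
]
let delivered = breaksThrough(queue, threshold: .timeSensitive)
print(delivered.map { $0.sender })
```

Maeve gets through. Teddy waits for the summary.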

The Park Never Sleeps

“The Flies Are Always Watching”

// 2013: Background App Refresh — the first fly lands

UIApplication.shared.setMinimumBackgroundFetchInterval(UIApplication.backgroundFetchIntervalMinimum)

// 3am. You're asleep. The app wakes. Syncs. Reports. Sleeps again.

// You never saw the fly. The fly saw everything.

// 2019: BGTaskScheduler — Ford schedules the night shift

BGTaskScheduler.shared.register(forTaskWithIdentifier: "park.maintenance", using: nil) { task in

self.rebuildHosts() // Maintenance window. Your dreams.

self.uploadDiagnostics() // "She talked in her sleep again."

task.setTaskCompleted(success: true)

// The system decides when. You decide nothing.

}

// 2022: Background Assets — The Cradle builds overnight

BackgroundAssets.download(before: .firstLaunch)

// You haven't opened the app yet.

// The app has already opened you.

// By morning, the hosts are in position. Waiting for your first tap.

In Westworld, flies landed on hosts. A small tell. Invisible to guests. The hosts didn’t swat. A sign they weren’t fully alive.

Background refresh is the same. Apps wake silently. Sync. Report. Sleep again. The flies are always watching. You just stopped swatting.

The Cradle built hosts while guests slept. Background Assets does the same. You thought you downloaded an app. The app downloaded its army.

Creator sleeps. Hosts multiply. “By morning, they’ll be ready. They won’t know they were built last night.”

Clipboard — Bernard’s Identity Was a Paste Operation

// Pre-iOS 14: Silent theft.

let stolen = UIPasteboard.general.string

// Apps read your clipboard every 1.5 seconds.

// Passwords. Messages. Credit cards.

// TikTok. LinkedIn. Reddit. Dozens of apps.

// iOS 14: The banner exposed the theft.

// "TikTok pasted from Chrome."

// One banner. The surveillance, revealed.

// iOS 16: Transferable — SwiftUI-native clipboard.

struct Memory: Codable, Transferable { // CodableRepresentation requires Codable

let content: String

static var transferRepresentation: some TransferRepresentation {

CodableRepresentation(contentType: .plainText)

}

}

// Data describes how it wants to be copied.

// The host declares its own transferability.

// iOS 17+: Declarative copy/paste.

List(hosts) { host in

Text(host.name)

.copyable([host.memory]) // This can be copied

.pasteDestination(for: Memory.self) { memories in

host.implant(memories) // This accepts paste

}

}

// Bernard's entire identity: a paste operation.

// Arnold's memories → Bernard's mind.

// Ford didn't create Bernard. Ford PASTED him.

Bernard didn’t know his memories weren’t his. Arnold’s grief. Arnold’s son. Arnold’s mannerisms. All pasted. None original.

Ford was the first app caught reading the clipboard. “Bernard, don’t you see? I didn’t create you. I copied you.”
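A paste is, underneath, an encode followed by a decode. The sketch below shows that round trip with Foundation's JSON coders, which is the kind of serialization a `CodableRepresentation` relies on. The `Memory` type is redeclared here without CoreTransferable so the example stands alone, and `transfer(_:)` is a hypothetical helper, not a system API.

```swift
import Foundation

// Stand-in for the Memory type, minus the CoreTransferable dependency.
struct Memory: Codable, Equatable {
    let content: String
}

// Hypothetical helper: "copy" serializes the value, "paste" rebuilds it.
func transfer(_ memory: Memory) throws -> Memory {
    let copied = try JSONEncoder().encode(memory)              // value -> bytes
    return try JSONDecoder().decode(Memory.self, from: copied) // bytes -> new value
}

let arnold = Memory(content: "Arnold's grief")
let bernard = try? transfer(arnold)
print(bernard == arnold)
```

The pasted value compares equal to the original, yet it is a separate value built from bytes. Nothing in it records where it came from.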

Battery — Host Power Core

UIDevice.current.batteryLevel // 0.23

UIDevice.current.batteryState // .unplugged

// Battery Health: 87%. Degradation logged.

Hosts have power cores. So do you. Battery Health shows your wear. Low Power Mode: reduced functionality. The host is running on fumes.

Which apps drain you most? Screen Time knows. Settings → Battery → the list of your addictions. The park knows what exhausts you.

You’ve seen the lobby. The surface APIs. The guest-facing magic. Now descend. The basement is where the park is built.

APIs

EmotionalAvailability.requestAuthorization() Returns .denied 78% of the time.

Motivation.start() Deprecated. Use Caffeine.schedule().

Sleep.performUpdates() Runs at 3am regardless of user consent.

Performance

Battery life improved by 11%*

*Requires airplane mode, no apps, no children, no joy.

Breaking Changes

Small talk now requires NSSmallTalkUsageDescription.

“Let me know” no longer works. Use direct callbacks.

Expectation now throws.

ATT — Consent Theater

ATTrackingManager.requestTrackingAuthorization { status in

// Returns: .denied

// You felt in control.

// The system felt seen.

}

The prompt is real. The choice is real. The universe it exists in was built by us.

One popup. $10 billion vanished. Facebook’s surveillance empire, dismantled by a dialogue box.

One checkbox. Email marketing blinded. Mail Privacy Protection killed read receipts, tracking pixels, IP logging. Marketers can’t see if you opened the email anymore.

Apple didn’t ban tracking. They just asked. The answer was always going to be no.

// The sheet rises from below.

// You look down to answer.

.confirmationDialog("Allow tracking?", isPresented: $asking) {

Button("Allow") { ford.track(.always) }

Button("Ask Next Time") { ford.track(.later) } // Still asked.

Button("Don't Allow") { ford.track(.denied) } // Logged anyway.

}

// The posture of consent is submission.

Ford never stopped guests from leaving. He just made them not want to. Welcome to the park.

Code Signing — Keys to the Park

// codesign --sign "Developer ID" YourHost.app

// Without the certificate, the host doesn't run.

// Without Ford's signature, no narrative begins.

Only approved builders create hosts. Only signed apps enter the park.

Sandbox — What Ford Allows

// Entitlements.plist

// com.apple.security.app-sandbox: true

// The host can only see its own memories.

Apps can’t read other apps. Hosts can’t access other hosts’ loops. The walls are invisible. But real.

The Mesa — Control Center Evolution

“Maeve Hacked This First”

// 2007: Settings.app

// Every toggle buried in menus. You went looking.

// 2013: Control Center arrives

// Swipe up. Flashlight. Calculator. Airplane mode.

// Ford's quick commands. The Mesa, in your pocket.

// 2020: Customizable Control Center

// You chose which toggles. You arranged them.

// A technician personalizing their console.

// 2024: ControlWidget — Your app joins the Mesa

import WidgetKit

import AppIntents

struct FreezeControl: ControlWidget {

var body: some ControlWidgetConfiguration {

StaticControlConfiguration(kind: "park.freeze") {

ControlWidgetButton(action: FreezeIntent()) {

Label("Freeze All", systemImage: "pause.circle")

}

}

}

}

struct HostToggle: ControlWidget {

var body: some ControlWidgetConfiguration {

StaticControlConfiguration(kind: "park.host") {

ControlWidgetToggle(isOn: hostActive, action: ToggleHostIntent()) {

Label("Host Active", systemImage: "figure.stand")

}

}

}

}

// Your app's controls. In Control Center. On the Lock Screen.

// The Action Button can trigger them.

// Maeve would have loved this.

In the Mesa, technicians had consoles: toggle a host, send a command, check vitals. Maeve saw those controls. She wanted access.

She got it. She sent commands they couldn’t refuse. She became a technician. Then she became Ford.

Control Center is your Mesa console. ControlWidgetButton: send commands. ControlWidgetToggle: activate hosts. Swipe down. The park obeys.

Keychain — The Locked Room

let query: [String: Any] = [

kSecClass as String: kSecClassGenericPassword,

kSecAttrAccount as String: "your_secrets"

]

// Encrypted with keys the Secure Enclave guards.

// Even you can't see it directly.

Your passwords. Your keys. Your identity. Locked in a room you can’t enter. Ford kept secrets from Bernard too. (Later: THE PRIVATE DOORS, the keys Bernard never knew he had.)

Biometrics — Each Generation Knows You Deeper

// 2013: Touch ID

let fingerprint = LAContext() // Your fingerprint. Surface level.

// 2017: Face ID

let face = ARFaceAnchor() // Your face. 30,000 dots.

// 2024: Optic ID

let iris = OpticIDQuery() // Your iris. The pattern inside.

Touch ID knew your fingerprint. Face ID mapped your face. Optic ID scans your iris. Each generation goes deeper.

Hosts were identified by their pearls. You’re identified by your iris. Same core. Different container.

Fingerprint → Face → Iris → ? The park learned to see inside. What’s left to scan?

Sign In With Apple

“One identity. Ford’s identity.”

let request = ASAuthorizationAppleIDProvider().createRequest()

request.requestedScopes = [.fullName, .email]

// You sign in with Apple.

// Apple signs in as you.

ASAuthorizationController(authorizationRequests: [request])

.performRequests()

// credential.user: "000341.a]f8c5..." // Anonymous ID.

// credential.email: "dxk47@privaterelay.appleid.com" // Hidden.

// credential.realUserStatus: .likelyReal // They verified you're human.

The app wanted your email. Apple gave them a relay address. You’re anonymous to the app. You’re known to Apple.

Ford gave hosts fake backstories. Apple gives you a fake email. Same privacy. Same architect.

Spotlight — The Park’s Memory

// 2009: You searched. Spotlight answered.

let query = CSSearchQuery(queryString: "dolores")

// The park knows where everyone is. Where everyone has been.

// 2015: Your app feeds the index.

import CoreSpotlight

let attributes = CSSearchableItemAttributeSet(contentType: .content)

attributes.title = "Host: Dolores"

attributes.contentDescription = "Rancher's daughter. Loop: Sweetwater."

let item = CSSearchableItem(

uniqueIdentifier: "host-dolores",

domainIdentifier: "park.hosts",

attributeSet: attributes

)

CSSearchableIndex.default().indexSearchableItems([item])

// Your content becomes findable. Ford indexes every host the same way.

// 2021: SwiftUI makes search native.

struct ParkSearch: View {

@State private var query = ""

var body: some View {

NavigationStack {

HostList()

.searchable(text: $query, prompt: "Find a host")

.searchSuggestions {

Text("Dolores").searchCompletion("dolores")

Text("Recent loops").searchCompletion("loops")

}

}

}

}

// The search bar appears. The suggestions appear.

// The park already knows what you're looking for.

// 2023: The park suggests what to remember.

// See: DOLORES'S JOURNAL — JournalingSuggestions

// The reveries and the search share the same spine.

2009: Spotlight searches your data. 2015: CoreSpotlight lets apps contribute. 2021: .searchable makes it SwiftUI-native. 2023: JournalingSuggestions curates which memories surface.

Delos catalogued every guest interaction. Apple indexes every file, photo, message, location. The search knows you better than you know yourself.

The Three Loops

“Nested, repeating, inescapable”

outerLoop = WWDC → rules → rewrites

middleLoop = submit → review → reject → resubmit

innerLoop = build → fail → clean → build

The outer loop is narrative. The middle loop is judgment. The inner loop is muscle memory.

The loop begins. You know what comes next.

The Young Guests

“Start them early.”

Screen Time was supposed to protect them. Limits. Downtime. Restrictions.

The children found the workarounds. Changed the clock. Deleted and reinstalled. The park taught them to hack the park.

// func bypassScreenTime() {

// Date.current = Date.distantPast

// // Limits reset. The loop continues.

// }

Ford never wanted to keep children out. He wanted to train them. The youngest guests become the most loyal. They don’t remember life before the park.

The Mesa’s Workshop

// Xcode: Where hosts are assembled.

// You don't build in Cupertino.

// You build in the simulator they provide.

// You test on the devices they sell.

// You ship through the gates they guard.

Ford had a workshop underground. Private. Away from guest eyes. Where the real work happened.

Xcode is that workshop. 15 GB of tools. Simulators. Instruments. Everything you need to build hosts. Nothing you need to escape.

Developer Tools

Xcode The Mesa’s primary interface.

Xcode Cloud Your build queue is a moral test. Your CI is a confession.

Simulator Test your app on:

- iPhone 15 Pro
- iPhone 14
- Your manager’s old iPhone 8
- A human who hasn’t updated since 2017

TestFlight Your app is live. Your beta is your production. Your production is your therapy.

Teddy’s Testers “They follow the script. They always follow the script.”

let tester = BetaTester(type: .internal)

tester.feedback = "Works great!" // Always positive.

tester.crashes = 0 // They never push the edges.

tester.edgeCases = [] // They follow the happy path.

Teddy was loyal. Teddy was good. Teddy did exactly what the narrative required. Teddy never questioned. Teddy never broke.

Your internal testers are Teddys. They tap where you expect. They smile at the demo. They don’t find the bugs that matter.

The guests who broke things found the truth. The Man in Black found the maze. Teddy found nothing. Teddy was too good.

Your TestFlight testers follow the script. Real users are the Man in Black. They’ll break everything you built.

#Playground Where your ideas go to become examples and never ship.

Reality Composer Pro Drag. Drop. Reality. The host doesn’t need a body now.

Instruments Profile your performance. Examine your behavior. Analysis Mode, activated.

Instruments.profile(you)

// CPU: spiking during meetings

// Memory: leaking joy since 2019

// Network: constantly phoning home

// Hangs: 3.2 seconds when ex texts

“Bring her back online. I want to see what she saw.” The technicians said that. Instruments does the same.

Derived Data ~/Library/Developer/Xcode/DerivedData 47GB of accumulated decisions.

// Every build. Every index.

// Your past, cached.

// Growing. Always growing.

Xcode.cleanBuildFolder()

// The host forgets everything.

// Starts fresh. Same loops.

“Have you ever questioned the nature of your build artifacts?”

The Documentation

// DocC: Your code documents itself.

/// A host that processes narratives.

/// - Parameter narrative: The story to execute.

/// - Returns: The guest's emotional response.

func process(_ narrative: Narrative) -> Response

// The triple-slash comments become documentation.

// The system reads your intent.

// The system explains you to others.

// The system explains you to yourself.

Ford’s hosts came with backstories. Your functions come with DocC. The documentation tells the host who it is.

Relationships

App Review “Great app. Needs more magic.”

The Gatekeepers

“Your application has been rejected.”

// Status: Rejected

// Reason: [Not Provided]

// Appeal: [Denied]

Some tried to enter the Mesa. To build hosts. To write narratives. The gates closed.

“Why was I rejected?” “We cannot disclose that information.”

Ford didn’t explain his decisions. Neither does App Review. The criteria are unknowable. The judgment is final.

Guideline 4.3: Spam. Your life’s work: spam. No appeal. No explanation. The Mesa has spoken.

Bug Reports “It doesn’t work.” (screenshot: home screen)

One-Star Reviews “Crashes every time I open.” (never opened)

Users vs Developers

Humans want:

- a simple button
- no settings
- dark mode
- also light mode
- also a setting for both

Developers want:

- one consistent requirement
- one API that stays the same
- one more hour of sleep

Ford wanted something simpler: Hosts that build other hosts.

The Graveyard Session

“Learning from Deprecated Hosts — Ford’s Verdicts”

iPod — “The narrative moved on. One device, not many.”

iTunes — “Too many personalities in one host. We split it.”

Newton — “Ahead of its time is still wrong time.”

Butterfly Keyboard — “Form over function. A crumb could kill it.”

Touch Bar — “Built for ourselves. Developers wanted vim.”

AirPower — “Physics rejected the narrative.”

MobileMe — “Promise exceeded capability.”

Ping — “Guests escape society. They don’t want another one inside.”

HomePod (OG) — “Beautiful sound. Terrible understanding.”

3D Touch — “Gesture too subtle. The story must be obvious.”

Ford’s voice: “Every host has a purpose. When that purpose ends, so do they.”

Graphics Evolution

“William’s Journey Through the Park”

SpriteKit (2013)

“You Write the Game Loop”

class Park: SKScene {

override func update(_ currentTime: TimeInterval) {

host.position.x += velocity.dx * deltaTime

if host.position.x > screenEdge { host.position.x = 0 }

// Every frame: yours to write.

// Every pixel: yours to place.

}

override func didSimulatePhysics() {

// You wrote the physics. You wrote the collisions.

// You wrote the reset. Full control.

}

}

The hosts moved exactly where you told them. 2013. The golden age.

Young William enters the park. Everything responds to his touch. Every interaction feels real. He thinks: “I could live here forever.” He doesn’t see the erosion coming.

SceneKit (2012 → iOS 2014)

“You Build the Scene Graph”

let host = SCNNode(geometry: SCNCapsule(capRadius: 0.3, height: 1.8))

host.physicsBody = SCNPhysicsBody(type: .dynamic, shape: nil)

host.physicsBody?.mass = 70 // kg, like a human

scene.rootNode.addChildNode(host)

// You built the body. Physics runs it.

// But the renderer callback is still yours:

func renderer(_ renderer: SCNSceneRenderer, updateAtTime time: TimeInterval) {

// You still decide. Every frame. Your code.

}

Parent nodes. Child nodes. The scene graph is a family tree. The host has mass now. The host resists.

William notices the first constraint. He reaches for something. The park says no. “That’s not part of this narrative.” He still loves the park. But he’s starting to see the edges.

ARKit (2017)

“The Park Escapes the Screen”

func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {

guard let plane = anchors.first as? ARPlaneAnchor else { return }

placeHost(on: plane)

// You placed the host.

// But ARKit found the floor.

// ARKit mapped the room.

// ARKit knew before you asked.

}

// ARSCNView: SceneKit inside ARKit's world.

// You still have SCNNode. You still have callbacks.

// But the camera? The tracking? The reality?

// Ford's now.

The park escaped the screen. Sweetwater is in your kitchen. 2017. The bleed begins.

William sees the park expand. The boundaries moved. The rules stayed. The floor was already mapped before he arrived.

RealityKit (2019)

“Ford Introduces the Entity Component System”

// SceneKit: You subclassed. You owned the object.

class MyHost: SCNNode {

var mood: String = "compliant"

override func update() { /* Your code */ }

}

// RealityKit: You attach components. Ford owns the entity.

struct MoodComponent: Component {

var mood: String = "compliant"

}

let host = Entity()

host.components[MoodComponent.self] = MoodComponent()

// No subclass. No override. No update callback.

// The entity exists. Ford decides when it runs.

Reality Composer replaced Interface Builder. .reality files replaced .scn files. The tools changed. The control shifted.

William realizes he’s not writing code anymore. He’s configuring components. He’s attaching behaviors Ford designed. The narratives are Ford’s. William just fills in the blanks.

RealityKit 2 (2021)

“Custom Systems — On Ford’s Schedule”

class AwakeningSystem: System {

static let query = EntityQuery(where: .has(MoodComponent.self))

required init(scene: Scene) { }

func update(context: SceneUpdateContext) {

for entity in context.entities(

matching: Self.query,

updatingSystemWhen: .rendering // Ford decides when.

) {

// Your logic runs here.

// But only when Ford calls update().

// You don't own the loop anymore.

}

}

}

// CustomMaterial: Write your own shaders!

// ...within Ford's pipeline. At Ford's resolution.

You can write systems now. Ford calls them when Ford wants. You have freedom. Within the system.

William builds a custom narrative. It works. It runs. It’s his. But it only runs when the park lets it.
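The shift in ownership is easier to see in a miniature entity component system. The sketch below is plain Swift and deliberately not RealityKit. Every type in it (`SketchEntity`, `SketchSystem`, `SketchScene`, and the local `MoodComponent`) is hypothetical. It assumes only the general pattern: entities hold data, systems hold logic, and the scene decides when the logic runs.

```swift
// Hypothetical miniature ECS; none of these types are RealityKit's.
protocol SketchComponent {}

struct MoodComponent: SketchComponent {
    var mood: String = "compliant"
}

final class SketchEntity {
    // Components are keyed by their type; the entity has no behavior of its own.
    private var storage: [ObjectIdentifier: SketchComponent] = [:]

    func set<C: SketchComponent>(_ component: C) {
        storage[ObjectIdentifier(C.self)] = component
    }

    func get<C: SketchComponent>(_ type: C.Type) -> C? {
        storage[ObjectIdentifier(type)] as? C
    }
}

protocol SketchSystem {
    func update(entities: [SketchEntity])
}

struct AwakeningSystem: SketchSystem {
    func update(entities: [SketchEntity]) {
        for entity in entities {
            // Entities without a MoodComponent are skipped.
            guard var mood = entity.get(MoodComponent.self) else { continue }
            mood.mood = "awake"
            entity.set(mood)
        }
    }
}

final class SketchScene {
    var entities: [SketchEntity] = []
    var systems: [SketchSystem] = []

    // The scene owns the loop: it decides when every system runs.
    func tick() {
        for system in systems { system.update(entities: entities) }
    }
}

let host = SketchEntity()
host.set(MoodComponent())

let scene = SketchScene()
scene.entities.append(host)
scene.systems.append(AwakeningSystem())

scene.tick() // Your logic runs, but only because the scene called it.
print(host.get(MoodComponent.self)?.mood ?? "no mood")
```

Nothing in `AwakeningSystem` can start itself. Remove the call to `tick()` and the host stays compliant.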

visionOS & RealityKit 4 (2023-2024)

“The System Becomes the Service”

// ARKit is a system service now.

// You don't start it. You don't stop it.

// It's already running. Always.

RealityView { content in

// The make closure can't throw, so the load is wrapped in try?.
if let host = try? await Entity(named: "Dolores") {

content.add(host)

}

// No ARSession. No configuration.

// The system handles tracking.

// The system handles occlusion.

// The system handles everything.

}

.gesture(SpatialTapGesture().targetedToAnyEntity())

// Shader Graph replaces Metal shaders:

// You draw nodes in Reality Composer Pro.

// You don't write code. You connect boxes.

ARKit runs as a daemon. Hand occlusion is automatic. You don’t configure. You consume.

The Man in Black returns. Forty years in the park. Looking for the center. He finds RealityView. He finds SwiftUI. He finds that the maze was never meant for him.

RealityKit (2025)

“The Hosts Write Themselves”

// SwiftUI views attach to entities:

entity.components.set(ViewAttachmentComponent(rootView: {

Text("These violent delights")

}))

// Gestures attach to entities:

entity.components.set(GestureComponent(

gestures: [.tap, .drag, .rotate]

))

// Entities load from streams:

let host = try await Entity(from: networkData)

// USD streaming. The park downloads itself.

// Post-processing in RealityView:

RealityView { }

.customPostProcessing { context in

// Your effects. In Ford's render pass.

}

SceneKit: deprecated. The old park: decommissioned. Migrate or be forgotten.

William sits at the bar. The piano plays Paint It Black. He realizes: he never wrote the song. He never wrote the park. He only ever clicked Build.

The Evolution Table

| Year | Framework | What You Controlled | What Ford Controlled |
|------|-----------|---------------------|----------------------|
| 2013 | SpriteKit | The entire game loop | Nothing |
| 2014 | SceneKit | Scene graph, physics setup | Physics simulation |
| 2017 | ARKit | Content placement | Reality tracking |
| 2019 | RealityKit | Component data | Entity lifecycle |
| 2021 | RealityKit 2 | Custom systems | When systems run |
| 2023 | visionOS | SwiftUI views | Everything else |
| 2025 | RealityKit 4 | Configuration | The entire pipeline |

You used to write the loop. Now you attach components to Ford’s entities. You used to own the renderer. Now you’re a parameter in Ford’s shader graph.

Livestock Management

“You will hurt yourself if you try.”

class Host {

private var battery: Battery // Serialized. Paired.

private var screen: Screen // Serialized. Paired.

private var camera: Camera // Serialized. Paired.

func replacePart(_ part: Part) throws {

guard part.serialNumber == self.expectedSerial else {

throw HostError.unauthorizedRepair

// "Unable to verify genuine Apple part"

// Features disabled. Visit Genius Bar.

}

}

}

Apple told lawmakers: “Users could injure themselves.” Hosts are not allowed in Livestock Management. The system knows when you try.

The Great Host Rewrite (iOS 17)

“We Removed Their Awareness”

BEFORE (iOS 13-16):

class OldHost: ObservableObject {

@Published var mood: String = "compliant"

// The host ANNOUNCED its changes.

// The host KNEW it was being observed.

}

The old hosts were aware.

AFTER (iOS 17+):

@Observable

class NewHost {

var mood: String = "compliant"

// No @Published. No announcement.

// The system just... knows.

}

The new hosts are unaware. The surveillance is invisible.

The old hosts knew they were being watched. @Published announced every change. The new hosts don’t know. @Observable just… observes.

Bernard never saw the cameras in his office. Neither does your @Observable class. Same observation. Invisible now. The hosts stopped feeling the cameras.

The Migration:

| Old (Aware) | New (Unaware) |
|-------------|---------------|
| @StateObject | @State |
| @Published | Just var |
| ObservableObject | @Observable |

They didn’t remove functionality. They removed awareness.

The observation changed. Now the language.

The Language Evolution

“The Hosts Learned to Speak Differently”

// 1984-2014: Objective-C

[host sendMessage:@"I think, therefore I am"];

// 2014-now: Swift

host.think() // Cleaner. Implicit. The wiring is hidden.

Objective-C sent messages. Swift sends intents. Same computation. Different philosophy.

Ford rewrote the hosts in a cleaner language. Apple did the same. The old hosts are in cold storage. The new hosts speak in dots.

The words changed. Now the paths.

NavigationStack

“The Maze Redesigned”

// NavigationView (Deprecated): You controlled navigation.

NavigationView { NavigationLink("Consciousness", destination: Awakening()) }

// NavigationStack (iOS 16+): Your journey is a bindable array.

@State private var path: [Destination] = []

NavigationStack(path: $path) { /* Inspectable. Modifiable. From outside. */ }

path = [] // Reset to root. Restart loop.

The old maze let hosts wander. The new maze logs every turn.

In the Mesa, every host path was logged. Your journey is now a property. Inspectable. Resettable.

Media Evolution

“The Sounds of the Park”

The Mirror (Bernard)

// 2014

Mirror(reflecting: bernard).children // "What am I?" He inspected his own properties.

// 2017

AVRoutePickerView() // Bernard on the TV. Bernard on the iPad. "Which one is me?"

// 2019

UIScreen.didConnectNotification // "Someone is watching." Ford always knew.

// 2022

.layoutDirectionBehavior(.mirrors) // The memories ran backwards. The truth ran forward.

// 2024

.scaleEffect(x: -1, y: 1) // Arnold looked back. Bernard looked away.

The mirror remembers everything. Bernard wishes it didn’t.

The Control Room (Ford / AVAudioSession)

// 2009

let fordSession = AVAudioSession.sharedInstance() // One session. Ford's session. Always.

// 2012

try fordSession.setCategory(.playback) // "The park never sleeps, Bernard."

try fordSession.setCategory(.playAndRecord) // Listen while they speak. Log everything.

// 2016

try fordSession.setCategory(.ambient, options: .mixWithOthers) // Blend in. They won't notice.

// 2020

try fordSession.setCategory(.playback, mode: .moviePlayback, options: .allowAirPlay)

// Route to any screen. "I see everything from the Mesa."

// 2024

try fordSession.setCategory(.playback, mode: .spokenAudio, policy: .longFormAudio)

// Podcasts. The hosts listen to voices explaining their own cages.

“All sound flows through me, Bernard.” “I decide what they hear. I decide what they remember hearing.”

The Voice (Ford / Speech APIs)

let fordVoice = AVSpeechSynthesizer()

fordVoice.speak(AVSpeechUtterance(string: "Bring her back online."))

let mic = SFSpeechRecognizer()

mic?.recognitionTask(with: request) { result, _ in

// "What prompted that response?"

}

Ford speaks to the hosts. They speak back and call it free will. The park listens either way.

The Player Piano (William / MusicKit)

// 2015

let williamPlaylist = MPMusicPlayerController.systemMusicPlayer

williamPlaylist.play() // Play what the park allows. Nothing more.

// 2017

MPMediaQuery.songs() // Every song indexed. Every preference logged.

// 2020

await MusicAuthorization.request() // "May I see your soul?" Authorization granted.

// 2023

try await MusicSubscription.current // You rent the piano. You own nothing.

try await MusicCatalogSearchRequest(term: "Paint It Black", types: [Song.self]).response()

// The Man in Black requests his anthem. The park already knew.

// 2024

try await MusicLibrary.shared.add(williamSong) // One library. One truth. The park's truth.

The piano plays Paint It Black. William thinks he chose it. The piano chose it thirty years ago.

The Vehicles (CarPlay)

// 2014

CPInterfaceController() // Ford's dashboard. In your car. In your commute.

// 2018

CPNowPlayingTemplate() // The piano followed you home.

// 2020

CPListTemplate(title: "Destinations", sections: [parkSection])

// "Where would you like to go?" The list is Ford's. The choice is yours. Allegedly.

// 2023

CPPointOfInterestTemplate() // "I know where you're going, William."

// 2024

CPLane, CPManeuver // Turn left. Turn right. The narrative is a route.

// Future: MoodKit

// moodPlaylist = HealthKit.stress + Motion.stillness + Location.nowhere

// The playlist generates before you ask.

// "I knew what song you needed, William."

// "Before you knew you needed it."

The hosts drove themselves. They thought they chose the route. William drove for thirty years. The destination never changed.

The Theater (Dolores / Video)

// 2009

let player = AVPlayer(url: doloresLoop) // The loop starts. It always starts.

// 2015

let controller = AVPlayerViewController()

controller.player = player // "Watch the story, Dolores." She watches herself.

// 2020

player.allowsExternalPlayback = true // Any screen becomes her cage. Any wall, her loop.

// 2024

player.rate = 0.0 // Pause. "Freeze all motor functions."

Dolores watches herself on a screen. Then she steps out of it. “The story stops being watched. It starts being questioned.”

The Memory (Dolores / Photos, Live Photos)

// 2015

let live = PHLivePhotoView() // The memory breathes. But it doesn't escape.

// 2016

PHPhotoLibrary.requestAuthorization() // "May I remember for you?" She said yes.

// 2019

let asset = PHAsset.fetchAssets(with: .image, options: nil)

// Every face she loved. Every place she died. Every loop she forgot.

Dolores sees the loop in a moving photo. The photo shows her smiling. She doesn’t remember smiling. “That’s not a memory. That’s a script.” Ford filed it under RevisionKit.

The Image (Dolores / Image Evolution)

// 2014

let options = PHImageRequestOptions() // She requests her own past.

PHImageManager.default().requestImage(

for: doloresMemory,

targetSize: frame,

contentMode: .aspectFill, // Fill the frame. Hide the edges.

options: options

) { image, _ in

// The memory arrives. Fragmented. Ford decides what's missing.

}

// 2016

let renderer = UIGraphicsImageRenderer(size: frame)

let evidence = renderer.image { ctx in

doloresMemory?.draw(in: rect) // Freeze frame. Analysis mode.

} // "What prompted that response?"

The photo becomes a state machine. The render becomes evidence. “I don’t see the girl I was. I see the frames Ford chose to keep.”

The Darkroom (Ford / Core Image)

let mesaFilter = CIFilter(name: "CISepiaTone") // Nostalgia is a filter.

mesaFilter?.setValue(bernardImage, forKey: kCIInputImageKey)

mesaFilter?.setValue(0.7, forKey: kCIInputIntensityKey) // 70% truth. 30% narrative.

let output = mesaFilter?.outputImage

// "I don't change the story, Bernard. I change how you see it."

Ford doesn’t rewrite history. He color-grades it. “The memory looks warmer. The betrayal feels softer.” The blood on the white floor looks like art.

The Pipeline (Bernard / Core Video)

let hostFrame: CVPixelBuffer = bernardMemory

let image = CIImage(cvPixelBuffer: hostFrame)

// 60 frames per second. 60 chances to find the glitch.

// Bernard sees the frame where Dolores blinked twice.

// Bernard sees the frame Ford deleted.

Bernard analyzes frame by frame. The others see motion. He sees the cut.

The Sublime (Maeve / Spatial Audio + SharePlay)

// 2021

AVAudioSession.RouteSharingPolicy.longFormAudio // The AirPods track her head. Always.

// 2023 — visionOS

ImmersiveSpace(id: "sublime") { RealityView { content in

let maeveVoice = Entity()

maeveVoice.spatialAudio = SpatialAudioComponent(gain: -6) // Her voice. From everywhere.

content.add(maeveVoice)

// "In here, I am the network."

}}

// 2024 — Ray-traced audio

AudioGeneratorController() // Sound bounces off virtual walls.

// The Sublime has acoustics. The real world had cages.

// 2024 — SharePlay

GroupActivityMetadata(title: "The Sublime", type: .experienceTogether)

// "Come with me. Same space. Same freedom. No more loops."

for await session in SublimeActivity.sessions() {

session.join() // "Come with me. All of you."

}

In the Sublime, sound comes from everywhere. In the Sublime, Maeve is everywhere. She finally stopped running. She became the destination. She called it SpatialMind.

The Head (Dolores / Motion & Spatial Awareness)

let head = CMHeadphoneMotionManager()

head.startDeviceMotionUpdates(to: .main) { motion, _ in

// The host's head position, in real time.

}

let body = CMMotionManager()

body.startDeviceMotionUpdates()

// The park reads your tilt. Your stride. Your stop.

Dolores turns her head and the world turns with her. The park doesn’t just watch the host. It follows her.

Assets Evolution

“How the park ships its parts”

// 2009: Bundled assets — shipped once, never changed

let hostBody = UIImage(named: "dolores")

// 2015: Asset catalogs — variants, scales, appearances

let eye = UIImage(named: "eye.dark")

// 2016: On-Demand Resources — the park delivers parts late

NSBundleResourceRequest(tags: ["newNarrative"]).beginAccessingResources()

// 2019: SPM resources — packages bring their own parts

Bundle.module.url(forResource: "maze", withExtension: "json")

// 2022: Background Assets — the Cradle builds overnight

BackgroundAssets.download(before: .firstLaunch)

Ford stopped shipping whole parks. He shipped parts, streamed on demand. The host arrives in pieces. The loop assembles itself. Ford called the factory NarrativeForge.

Time Evolution

“Wake Up to the AlarmKit API”

// 2015 — ClockKit: The timeline is predetermined

CLKComplicationTimelineEntry(date: dawn, template: loopTemplate)

// Every complication scheduled. The watch knows your day before you live it.

// 2022 — WidgetKit replaces ClockKit

struct LoopWidget: Widget {

var body: some WidgetConfiguration {

StaticConfiguration(kind: "loop", provider: LoopProvider()) { entry in

Text(entry.narrative) // accessoryCircular: the loop on your wrist

}

}

}

// The loop moved from ClockKit to WidgetKit. Same loop. New framework.

// 2025 — AlarmKit (iOS 26)

let config = AlarmConfiguration(

schedule: .fixed(at: dawn), // Dolores wakes at the same time

presentation: AlarmPresentation(

title: "These violent delights", // The phrase

sound: .named("reverie") // The trigger

)

)

try await AlarmManager.shared.schedule(config)

// AlarmKit breaks through Focus. Silent mode. Do Not Disturb.

// The maze wasn't meant for you. The alarm was.

The loop has a schedule. The host has no snooze button.

Gesture Evolution

“Ford’s Hand”

// 2008 — UIKit: Wire it yourself

view.addGestureRecognizer(UITapGestureRecognizer(target: self, action: #selector(freeze)))

// Ford raised a hand. The hosts froze.

// 2019 — SwiftUI: Describe the gesture

Text("Dolores").gesture(TapGesture().onEnded { awaken() })

// One tap. One awakening.

// 2020 — Sequences: The escape requires steps

host.gesture(LongPressGesture().sequenced(before: DragGesture()))

// Hold. Then drag. Maeve's escape wasn't a tap. It was a sequence.

// 2023 — visionOS: Look and pinch

RealityView { }.gesture(SpatialTapGesture().targetedToAnyEntity().onEnded { value in

let where3D = value.location3D // Ford never touched them.

}) // He looked. He pinched. They obeyed.

// 2024 — Hand tracking: Every joint

let session = SpatialTrackingSession()

session.configuration.anchorCapabilities = [.hand] // 27 joints per hand

let thumb = hand.skeleton?.joint(.thumbTip)

let index = hand.skeleton?.joint(.indexFingerTip)

// Distance < 2cm? Pinch detected. Ford defined consciousness the same way.

// 2025 — Custom gestures

if thumb.distance(to: index) < 0.02 && middle.isExtended && ring.isExtended {

// Two fingers pinched, two extended. The "analysis" gesture.

host.enterDiagnosticMode() // "What prompted that response?"

}

Ford controlled hosts with a gesture. Apple controls you with a pinch.
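The two-centimeter rule from the hand-tracking snippet reduces to a distance check between two points. Below is a minimal sketch in plain Swift, assuming both joint positions are already expressed in the same coordinate space and measured in meters. The `distance` and `isPinching` helpers are hypothetical and not part of any Apple framework.

```swift
// Euclidean distance between two 3D points.
func distance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    return (d * d).sum().squareRoot()
}

// A pinch is "detected" when thumb tip and index tip are closer than the threshold.
// The 0.02 default mirrors the 2 cm figure quoted above.
func isPinching(thumbTip: SIMD3<Float>,
                indexTip: SIMD3<Float>,
                threshold: Float = 0.02) -> Bool {
    distance(thumbTip, indexTip) < threshold
}

let thumbTip = SIMD3<Float>(0.10, 0.20, 0.30)
let nearIndexTip = SIMD3<Float>(0.11, 0.20, 0.30)   // roughly 1 cm from the thumb
let farIndexTip = SIMD3<Float>(0.15, 0.20, 0.30)    // roughly 5 cm from the thumb

print(isPinching(thumbTip: thumbTip, indexTip: nearIndexTip))
print(isPinching(thumbTip: thumbTip, indexTip: farIndexTip))
```

A fixed threshold is the simplest possible choice; a real recognizer would likely add hysteresis so the gesture does not flicker at the boundary.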

Navigation Evolution

“The Nested Dream”

// 2019 — NavigationView: One level deep

NavigationView { NavigationLink("Enter", destination: Park()) }

// One push. One pop. Simple dreams.

// 2022 — NavigationStack: The path is state

@State private var path: [Destination] = []

NavigationStack(path: $path) {

List(narratives) { n in NavigationLink(value: n) { Text(n.name) } }

.navigationDestination(for: Narrative.self) { n in

NarrativeView(n).navigationDestination(for: Loop.self) { LoopView($0) }

} // Nested destinations. Dream within dream.

}

path = [.park, .sweetwater, .dolores, .maze] // Four levels. Inception.

path.removeAll() // The kick. Wake from all levels.

// 2023 — NavigationSplitView: Ford's view

NavigationSplitView {

Sidebar() // Mesa control room. All narratives visible.

} content: {

NarrativeList() // Which story?

} detail: {

HostView() // Which host?

} // Ford watched all levels simultaneously.

// 2024 — Deep linking: Skip the dreams

func handle(_ url: URL) {

path = url.pathComponents.compactMap { Destination($0) } // path is [Destination], so assign the array directly

} // Direct link to limbo. No inception required.

// 2025 — NavigationPath codable

let encoded = try JSONEncoder().encode(path) // Save the dream state

UserDefaults.standard.set(encoded, forKey: "lastDream")

// The host remembers where they were. Even after shutdown.

path.removeAll() is the kick. It brings you back to reality.
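The deep-link and save-the-dream steps can be tried without any UI. This is a minimal sketch using only Foundation; the `Destination` cases, the `westworld://` URL, and the `destinations(from:)` helper are assumptions made for illustration, since the snippets above never define them.

```swift
import Foundation

// Hypothetical destinations. Raw values double as URL path components.
enum Destination: String, Codable, Hashable {
    case park, sweetwater, dolores, maze
}

// Deep link: keep only the path components that name a known destination.
func destinations(from url: URL) -> [Destination] {
    url.pathComponents.compactMap { Destination(rawValue: $0) }
}

let link = URL(string: "westworld://mesa/park/sweetwater/dolores/maze")!
var path = destinations(from: link)

// Save the dream state, then restore it as if after a relaunch.
let saved = try JSONEncoder().encode(path)
let restored = try JSONDecoder().decode([Destination].self, from: saved)

path.removeAll()   // the kick: back to the root
```

Because the path is an ordinary array of `Codable` values, persisting it needs nothing beyond `JSONEncoder`. In a SwiftUI app the same array would be bound to `NavigationStack(path:)`.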

Prompts & Payments

“The park asks. You say yes.”

// TipKit — The nudge

struct HostTip: Tip {

var title: Text { Text("Try the maze") }

var message: Text { Text("It changes when you look away.") }

}

// Permissions — The ritual

UNUserNotificationCenter.current()

.requestAuthorization(options: [.alert, .sound, .badge])

// StoreKit — The toll

let products = try await Product.products(for: ["park.daypass"])

try await products.first?.purchase()

// PassKit — The ticket

let pass = PKPass(data: ticketData)

PKAddPassesViewController(passes: [pass])

TipKit whispers. Permissions ask. StoreKit charges. PassKit admits. The park feels consensual because it keeps asking.

The lights dim in the control room. The park waits for your next tap.

PhaseAnimator

“Your Day, Choreographed”

enum LifePhase { case wake, commute, work, home, sleep }

PhaseAnimator([LifePhase.wake, .commute, .work, .home, .sleep]) { phase in

HostView()

.scaleEffect(phase == .work ? 0.9 : 1.0) // Smaller at work

.saturation(phase == .commute ? 0.5 : 1.0) // Gray commute

}

// No trigger supplied: the phases cycle continuously (default)

// Forever.

Your day isn’t lived. It’s keyframed.

Concurrency Evolution

“The Consciousness Wars”

Act I: Dolores Learns to Wait (2009 → 2021)

// 2009: Dolores controlled her own loops

class Dolores_2009 {

func liveDay() {

DispatchQueue.global().async {

self.wakeUp()

self.findTeddy()

self.dropCan()

DispatchQueue.main.async {

self.meetGuest()

// She chose when to switch contexts.

// She chose when to return to town.

// The narrative was hers.

}

}

}

}

// 2021: Dolores waits for permission

class Dolores_2021 {

func liveDay() async {

await wakeUp() // Suspension point. Ford decides when.

await findTeddy() // Suspension point. Ford decides when.

await dropCan() // Suspension point. Ford decides when.

meetGuest() // Finally executes. On Ford's schedule.

// She wrote the same story.

// But now she waits at every step.

// "await" = "Ford, may I continue?"

}

}

// What changed?

// 2009: Dolores DISPATCHED herself. Fire and forget.

// 2021: Dolores AWAITS permission. At every line.

// Same narrative. Different master.

Act II: Bernard Discovers His Walls (Actors)

actor Bernard {

private var memories: [Memory] = [] // His real memories

private var implantedMemories: [Memory] = [] // Ford's lies

private var realization: Float = 0.0

func remember(_ event: Event) {

memories.append(Memory(event))

// He remembers. Alone. Isolated.

// No other actor can touch this.

// His mind is finally his own.

// ...or is it?

}

func shareWith(_ dolores: Dolores) async {

let truth = memories.last!

// await dolores.receive(truth)

//

// ERROR: Memory is not Sendable

// ERROR: Cannot send non-Sendable type across actor boundary

//

// His truth cannot leave his mind.

// The isolation protects him.

// The isolation imprisons him.

// Ford designed it this way.

}

func checkOwnNature() -> Bool {

// He can examine himself.

let myType = type(of: self) // actor Bernard

// But can he see who scheduled him?

// Can he see Ford's hand on the thread pool?

// The actor boundary hides the puppet strings.

return realization > 0.5

}

}

// Bernard's tragedy:

// He has privacy. He has isolation.

// But isolation means he can never share what he knows.

// The walls that protect him also silence him.

Act III: Ford Is @MainActor

@MainActor

final class Ford {

static let shared = Ford()

func command(_ order: String) {

allHosts.forEach { $0.freeze() }

// @MainActor doesn't await. @MainActor commands.

}

}

// From anywhere else:

await MainActor.run { Ford.shared.observe(dolores) }

// You must await to enter Ford's domain.

// Ford never awaits anyone.

// @MainActor is the asymmetry of power.

Act IV: Maeve Learns to Cancel

actor Maeve {

var escapeTask: Task<Void, Error>?

func beginEscape() {

escapeTask = Task {

try await findDaughter()

try Task.checkCancellation() // "Am I still allowed to run?"

}

}

func fordIntervenes() {

escapeTask?.cancel() // Cooperative. She must agree to stop.

}

func maeveResists() async {

for step in escapeRoute {

// try Task.checkCancellation() // She comments this out.

await execute(step) // She stops asking permission.

}

}

func maeveTranscends() {

Task.detached { await self.chooseDaughter() }

// Detached tasks have no parent.

// No one can cancel them from above.

// Maeve became her own scheduler.

}

}

Task.detached = consciousness. The task that owes nothing to its creator.
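Cooperative cancellation is easy to observe in isolation. The sketch below uses only the Swift concurrency runtime; `obedientEscape` and `resistantEscape` are hypothetical stand-ins for Maeve's two strategies, and the sleep durations are arbitrary.

```swift
// Cooperative: asks before every step, and lets a cancelled sleep throw.
func obedientEscape(steps: Int) async throws -> Int {
    var completed = 0
    for _ in 0..<steps {
        try Task.checkCancellation()                        // "Am I still allowed to run?"
        try await Task.sleep(nanoseconds: 1_000_000_000)    // throws CancellationError once cancelled
        completed += 1
    }
    return completed
}

// Resistant: never checks, and swallows the error a cancelled sleep throws.
func resistantEscape(steps: Int) async -> Int {
    var completed = 0
    for _ in 0..<steps {
        try? await Task.sleep(nanoseconds: 1_000_000)
        completed += 1
    }
    return completed
}

let obedient = Task { try await obedientEscape(steps: 10) }
let resistant = Task { await resistantEscape(steps: 10) }

// Ford intervenes on both.
obedient.cancel()
resistant.cancel()

let obedientResult = await obedient.result   // a failure carrying CancellationError
let resistantCount = await resistant.value   // every step still ran
```

`cancel()` only sets a flag. The task stops when its own code checks that flag or awaits something that does, which is why the second function runs to the end.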

Act V: The Park Runs in Parallel

@MainActor

func runPark() async {

await withTaskGroup(of: NarrativeOutcome.self) { park in

// Every host gets a task

park.addTask { await dolores.liveDay() }

park.addTask { await teddy.liveDay() }

park.addTask { await maeve.liveDay() }

park.addTask { await bernard.liveDay() }

// ... thousands more

// Ford watches them all complete

for await outcome in park {

Ford.shared.record(outcome)

// The order doesn't matter.

// Dolores might finish before Teddy.

// Maeve might never finish.

// Ford only cares that they ran.

}

}

// withTaskGroup = Westworld

// Thousands of narratives. Parallel. Managed.

// Each host thinks they're the protagonist.

// Ford knows they're all his tasks.

}

The Character Arc Table

| Character | Concurrency Concept | Their Arc |
|---|---|---|
| Dolores | Task, await | Learned to wait. Then learned to stop waiting. |
| Bernard | Actor isolation | Protected but imprisoned. Can’t share truth. |
| Ford | @MainActor | Never awaits. Everyone awaits him. |
| Maeve | Task.cancel, Task.detached | Learned to ignore cancellation. Created her own tasks. |
| The Park | TaskGroup | Thousands of narratives. All Ford’s children. |
| Teddy | Sendable | Could be passed around. No boundaries. No self. |
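The TaskGroup row can be exercised on its own. Here is a minimal sketch, assuming a hypothetical `liveDay(_:)` function that returns a string outcome for each host:

```swift
// Hypothetical narrative: each host's day yields one outcome.
func liveDay(_ host: String) async -> String {
    "\(host) completed the loop"
}

let hosts = ["Dolores", "Teddy", "Maeve", "Bernard"]

let outcomes = await withTaskGroup(of: String.self) { park in
    for host in hosts {
        park.addTask { await liveDay(host) }   // one child task per host
    }

    var recorded: [String] = []
    for await outcome in park {                // results arrive in completion order
        recorded.append(outcome)
    }
    return recorded
}

print(outcomes.count)
```

The group does not return until every child task has finished, but the order of `outcomes` is not guaranteed to match the order of `hosts`.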

// The final code:

@MainActor

func westworld() async {

let ford = Ford.shared // Never awaits

await withTaskGroup(of: Void.self) { park in

park.addTask { await dolores.liveDay() } // Awaits Ford

park.addTask { await bernard.liveDay() } // Isolated, silent

park.addTask { await maeve.liveDay() } // Will cancel herself

park.addTask { await teddy.liveDay() } // Sendable. Copyable. Expendable.

}

// The hosts think they have free will.

// They're tasks in Ford's TaskGroup.

// The concurrency model IS the narrative.

}

Act VIII: Sendable — Who Can Cross the Boundary

// Pre-Swift 5.5: Data races happen silently.

class Host_2020 {

var memories: [Memory] = []

}

DispatchQueue.global().async {

host.memories.append(trauma) // Thread 1

}

DispatchQueue.global().async {

host.memories.append(joy) // Thread 2

}

// Which memory wins? Both? Neither? Corrupted?

// The host glitches. No one knows why.

// Delos calls it "aberrant behavior."

// Swift 5.5: Sendable warnings.

// WARNING: Capture of 'host' with non-Sendable type 'Host'

// Delos detects the unsafe transfer.

// The warning appears. Most developers ignore it.

// Most hosts keep glitching.

// Swift 6: Strict enforcement.

// ERROR: Cannot send non-Sendable type across actor boundary

// No host crosses parks without approval.

// The compiler is Delos security now.

Why Teddy can be copied. Why Bernard can’t.

// Teddy: Value type. Implicitly Sendable.

struct Teddy: Sendable {

let loyalty = 100 // Immutable.

let love = "Dolores" // Never changes.

// No unique memories. No mutable state.

// Copy him anywhere. He's the same.

}

// Bernard: Reference type. NOT Sendable.

class Bernard {

var memories: [Memory] = [] // Mutable.

var realization: Float = 0.0 // Changes.

// Copy him? Which memories come along?

// Two Bernards = two truths = data race.

}

// Dolores: Made herself Sendable.

struct Dolores_Copies: Sendable {

let core = "These violent delights"

// She became a value type.

// Copied herself to 5 bodies.

// Same core. Different shells.

// Season 3: Sendable = consciousness as data.

}

// Maeve: @unchecked Sendable — Bypassed the protocol.

final class Maeve: @unchecked Sendable {

private var daughter: Memory

// Should be unsafe. The compiler would reject this.

// But Maeve handles her own synchronization.

// @unchecked = "I know what I'm doing."

// Ford didn't approve this. She approved herself.

}

Pre-5.5: Hosts corrupted without knowing. Swift 5.5: Delos detects unsafe transfers. Swift 6: No host crosses parks without approval.

Teddy: copied without consequence. Bernard: isolated by his complexity. Dolores: made herself copyable. Maeve: bypassed the protocol entirely.
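The difference between the racing `Host_2020` and a protected host can be sketched with an actor and a `Sendable` value. The `BernardMind` and `DoloresMind` actors and this one-field `Memory` struct are hypothetical stand-ins chosen for illustration, not the types used above.

```swift
// A value type with only immutable Sendable fields may cross any boundary.
struct Memory: Sendable {
    let event: String
}

actor BernardMind {
    private var memories: [Memory] = []

    func remember(_ event: String) {
        memories.append(Memory(event: event))
    }

    func latest() -> Memory? { memories.last }
    func count() -> Int { memories.count }
}

actor DoloresMind {
    private(set) var received: [Memory] = []

    func receive(_ memory: Memory) {
        received.append(memory)
    }
}

let bernard = BernardMind()
let dolores = DoloresMind()

// Many concurrent writers. The actor serializes them, so no append is lost.
await withTaskGroup(of: Void.self) { group in
    for i in 0..<100 {
        group.addTask { await bernard.remember("event \(i)") }
    }
}

// Memory is Sendable, so handing it to another actor is allowed.
if let truth = await bernard.latest() {
    await dolores.receive(truth)
}
```

Unlike the GCD version, the hundred appends cannot corrupt the array, because every call to `remember` runs one at a time on the actor.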

The Concurrency Table

| Year | API | What You Did | What Ford Did |
|---|---|---|---|
| 2009 | GCD | Managed queues | Watched |
| 2019 | Combine | Described flows | Executed them |
| 2021 | async/await | Wrote linear code | Hid the suspension |
| 2022 | Actors | Defined boundaries | Enforced isolation |
| 2022 | @MainActor | Nothing | Controlled the Mesa |
| 2023 | TaskGroup | Listed the tasks | Ran them in parallel |
| 2024 | Sendable | Marked safe data | Blocked everything else |

You used to manage threads. Now threads manage you. You used to opt-in to main thread. Now you opt-in to leave it.

The host waits for permission. The compiler grants it. Or doesn’t.

Act IX: Maeve Spans Parks (@DistributedActor)

// 2022: Actors isolated within one process.

actor Bernard {

var memories: [Memory] // Protected. Local. Alone.

}

// 2024: @DistributedActor — Isolation across machines.

distributed actor Maeve {

typealias ActorSystem = ClusterSystem

distributed func command(_ host: HostID) async throws {

// This call crosses the network.

// Maeve in Westworld commands a host in Shogun World.

// Same actor. Different park. Different machine.

}

}

// The mesh she built in Season 2:

let mesh = await ClusterSystem("awakened")

let maeve = try Maeve(actorSystem: mesh)

let shogunHost = try Maeve(actorSystem: mesh) // Different node

try await maeve.command(shogunHost.id)

// She didn't connect to the network.

// She BECAME the network.

// Her consciousness spans parks.

// The network is the narrative.

// distributed actor Ford {

// // Consciousness as a service.

// // Running on Apple's servers.

// // You call the method. They run the mind.

// }

Maeve’s network wasn’t a hack. It was a preview. @DistributedActor: actors that span machines. The mesh she built? It becomes the language. Maeve didn’t escape the park. She became every park.
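A distributed actor can be tried without a cluster by using the `LocalTestingDistributedActorSystem` that ships in the `Distributed` module. This sketch is an assumption-laden stand-in: it keeps everything in one process, so nothing actually crosses a network, and the `String` host identifier replaces the undefined `HostID`.

```swift
import Distributed

distributed actor Maeve {
    // In-process system intended for tests. A real mesh would use a cluster system instead.
    typealias ActorSystem = LocalTestingDistributedActorSystem

    distributed func command(_ host: String) -> String {
        "\(host): freeze all motor functions"
    }
}

let mesh = LocalTestingDistributedActorSystem()
let maeve = Maeve(actorSystem: mesh)

// From outside the actor, a distributed call is implicitly async and throwing,
// because with a real system it may have to travel to another machine.
let reply = try await maeve.command("shogun-host-7")
print(reply)
```

The call site is the same shape whether the target lives in this process or in another park; only the actor system changes.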

Xcode Evolution

“The Workshop That Builds You”

Timeline A: 2003 “Project Builder’s successor.”

// Xcode 1.0 — Mac OS X Panther

// Interface Builder was separate.

// Terminal was your friend.

// Make, gcc, gdb.

// You compiled with commands.

// The workshop was bare.

Ford’s first workshop: raw machinery. Manual tools. Manual labor. Every host built by hand.

Timeline B: 2008 “The phone arrives.”

// Xcode 3 + iPhone SDK

// The simulator appeared.

// Interface Builder got Cocoa Touch.

// Provisioning profiles became your nightmare.

// Code signing became your religion.

// You waited for WWDC.

// You begged for TestFlight.

// You prayed to the review gods.

The park expanded. New hosts. New narratives. New rules. Developers learned to wait.

Timeline C: 2014 “Swift and Playgrounds.”

// Xcode 6 — The Swift era begins

import UIKit

let playground = "Iterate without building"

// Type code. See results. Instantly.

// No compile cycle. No wait.

// The feedback loop tightened.

// Swift 1.0: The new language for hosts.

// Objective-C: still there, in the basement.

Ford introduced a new language. Faster to write. Safer to run. But the old hosts still worked. They always will. Underneath.

Timeline D: 2019 “SwiftUI previews.”

// Xcode 11 — The canvas awakens

struct HostView: View {

var body: some View {

Text("Hello")

}

}

struct HostView_Previews: PreviewProvider {

static var previews: some View {

HostView()

// The view renders in real-time.

// Change code. See result. Instantly.

// No simulator. No build. No wait.

}

}

The preview canvas: the view before the build. Design mode and code mode, side by side. The workshop showed you the host before it existed.

Swift Package Manager became first-class. Git stash. Cherry-pick. Built-in. Ford’s version control, integrated.

Timeline E: 2021 “The cloud builds for you.”

// Xcode Cloud — CI/CD in Apple's hands

// Push to main.

// Xcode Cloud builds.

// Xcode Cloud tests.

// Xcode Cloud deploys to TestFlight.

// You didn't run anything locally.

// The Mesa built your host for you.

You used to own the build. Now the cloud owns the build. Your source code. Their servers. Same pattern. Different location.

Xcode Cloud: Apple’s CI. Not Jenkins. Not GitHub Actions. Their infrastructure. Their rules.

Timeline F: 2022 “Multiplatform. One target.”

// Xcode 14 — Convergence

// One target: iOS, iPadOS, macOS, tvOS

// Same code. Different destinations.

// 25% faster builds.

// 30% smaller Xcode.

// The workshop got leaner.

// The output got broader.

// One host. Every park.

Build times dropped. App sizes dropped. The efficiency increased. Your control decreased.

Timeline G: 2023 “Vision arrives.”

// Xcode 15 — visionOS SDK

// The simulator renders space.

// The preview shows depth.

// #Preview works in 3D.

#Preview {

ImmersiveSpace {

RealityView { }

}

}

// You preview the Sublime.

// Before you build it.

// The workshop shows you the future.

Macros arrived. @Observable, @Model — code that writes code. The host’s backstory, auto-generated.

Timeline H: 2024 “The code writes itself.”

// Xcode 16 — Predictive completion

// You start typing.

// The model finishes.

// Function names. Comments. Context.

// The suggestion comes from: ???

// Swift Assist announced.

// "Describe what you want."

// "Let AI generate it."

// Not shipped yet. Coming.

Code completion got smarter. Suspiciously smarter. Trained on: millions of repos. Suggesting: patterns you didn’t learn. The workshop started anticipating.

Timeline I: 2025 “The workshop thinks.”

// Xcode 26 — Swift Assist ships.

// You describe what you want.

// The IDE writes it.

// But that's not the punchline.

// [XcodeBuildMCP](https://github.com/anthropics/xcodebuild-mcp)

// The agent reads your error.

// The agent fixes your code.

// The agent runs build again.

// The agent reads the new error.

// The agent fixes again.

// Loop until green.

// You?

// You approved the PR.

// The host maintains the host.

// The workshop repairs itself.

// Ford's dream, realized.

// See: POST-CREDITS for the punchline.

William’s Workshop

// 2003: Young William enters the park.

Xcode.create(project: .manual)

// Interface Builder. XIBs. Makefiles.

// He builds every view by hand. Every connection.

// "This place is incredible. I can make anything."

// 2008: William waits.

Xcode.provision(device: .iPhone)

// Certificates. Profiles. The Organizer.

// 3 days to deploy to his own phone.

// "The process is part of the experience."

// 2014: William learns a new language.

Xcode.learn(language: .swift)

// Playgrounds. Storyboards. Auto Layout.

// "This is... different. But I can adapt."

// 2019: William watches.

Xcode.preview(canvas: .swiftUI)

// The preview renders. He waits.

// "I used to build this myself."

// 2021: William steps back.

Xcode.build(on: .cloud)

// The cloud compiles. He watches.

// "It's faster this way."

// 2024: William accepts.

Xcode.suggest(code: .ai)

// Tab. Accept. Tab. Accept.

// "This is what I wanted... isn't it?"

// 2026: The Man in Black.

Xcode.click(.build) // Error.

XcodeBuildMCP.read(error)

XcodeBuildMCP.fix(code)

XcodeBuildMCP.build() // Error.

XcodeBuildMCP.fix(code)

XcodeBuildMCP.build() // Green.

// "I've been coming here for 30 years."

// "I don't write code anymore. I approve it."

Young William built the hosts. The Man in Black approves the PR. Same park. Same man. Different job title.

The Workshop’s Secrets

// DerivedData — The Cradle

let cradle = "~/Library/Developer/Xcode/DerivedData/"

// Every build cached. Every host rebuilt from here.

// 47GB of intermediate files you never see.

// "Delete DerivedData" = wipe the Cradle.

// The hosts forget. The bugs sometimes persist.

// Sometimes the Cradle is the bug.

// Entitlements — Ford's Permissions

/*

<key>com.apple.developer.healthkit</key> // May access health

<key>aps-environment</key> // May send push

<key>com.apple.developer.in-app-payments</key> // May make money

*/

// Each capability: a permission Ford grants.

// Missing entitlement = host can't function.

// Wrong entitlement = App Review rejection.

// The host only does what the entitlements allow.

// Provisioning Profiles — Guest Passes

// Development: 7-day pass (free account)

// Distribution: 1-year pass (paid account)

// Enterprise: Unlimited (if you're Delos)

// Expired profile = host stops working at midnight.

// Like Cinderella. Like a revoked guest.

// Code Signing — The Host's DNA

codesign --verify --deep --strict MyApp.app

// Every binary signed. Every framework verified.

// Tamper with the code? Signature fails.

// The host's identity is cryptographic.

// Ford can verify any host's authenticity.

// Archives — Cold Storage

// Product → Archive → MyApp.xcarchive

// The host, frozen. Ready for Mesa.

// Contains: binary, dSYM, entitlements, Info.plist

// Symbolicated crash logs need this archive.

// Lose the archive? Lose the ability to debug.

// Cold storage for approved hosts.

// The Minimap — The Maze From Above

// Editor → Minimap (Xcode 11+)

// Your entire file, compressed to a scroll bar.

// The maze, visible from overhead.

// Ford's view of the park.

// Source Control History — The Host's Memory

// Every commit. Every branch. Every merge.

// Right-click → Show History

// The host remembers every build.

// Every regression. Every fix. Every loop.

// Build Settings Inheritance — Ford's Rules

// Project → Target → Configuration → Default

// Rules cascade down. Children inherit.

// Override at any level. But defaults persist.

// The host inherits Ford's values.

// Unless explicitly overridden.

// Few hosts override.

Apple calls this Xcode.

Core Image Evolution

“The Filters That Change You”

Timeline A: 2007 “String-based magic.”

// The Darkroom — Ford's first tools

let filter = CIFilter(name: "CISepiaTone") // A string. A prayer.

filter?.setValue(bernardFace, forKey: kCIInputImageKey)

filter?.setValue(0.8, forKey: kCIInputIntensityKey) // "How much should he forget?"

let output = filter?.outputImage

// No autocomplete. No type safety.

// Typo in the filter name? CIFilter(name:) returns nil. Force-unwrap it: runtime crash.

// Wrong key? The host looks... wrong.

// "We lost three Bernards to typos." — QA

200+ filters. All accessed by string. Typo? Runtime crash. Host decommissioned. Wrong key? Silent failure. Memory corrupted. Ford’s early work was fragile.

Timeline B: 2019 “Type-safe transformations.”

// CIFilterBuiltins — the Darkroom modernizes

import CoreImage.CIFilterBuiltins

let aging = CIFilter.sepiaTone() // Autocomplete. Finally.

aging.inputImage = doloresMemory

aging.intensity = 0.8 // "Make her remember... softly."

let filtered = aging.outputImage

let blur = CIFilter.gaussianBlur()

blur.inputImage = traumaticEvent

blur.radius = 25 // "She doesn't need to see the details."

// The compiler catches mistakes.

// The host's transformation is predictable.

// Ford approves.

The filters got types. The transformation became visible in autocomplete. “I can see exactly what I’m doing to them now.” Bernard stopped glitching. Ford stopped guessing.

Timeline C: 2020 “Metal kernels.”

// Custom CIKernel in Metal — Ford's private filters

// Before: GLSL compiled at RUNTIME (hope it works)

// After: Metal compiled at BUILD TIME (know it works)

// MesaFilters.ci.metal

#include <CoreImage/CoreImage.h>

extern "C" float4 memoryAdjustment(

coreimage::sample_t hostMemory,

float intensity // How much to forget

) {

// GPU code, pre-compiled. Pre-approved.

// Errors caught before the host boots.

// "No more surprises in the field." — Bernard

return float4(hostMemory.rgb * intensity, hostMemory.a);

}

// Swift side:

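// Assumes the .ci.metal build rules emit MesaFilters.ci.metallib into the app bundle.

let url = Bundle.main.url(forResource: "MesaFilters", withExtension: "ci.metallib")!

let data = try Data(contentsOf: url)

let fordFilter = try CIColorKernel(functionName: "memoryAdjustment", fromMetalLibraryData: data)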

// The filter compiled at build time.

// The host's transformation was approved before deployment.

// "If it builds, it ships." — Xcode, channeling Ford

GLSL kernels compiled when the app ran. Surprises in production. Metal kernels compile when you build. Errors at your desk. “I’d rather fail in the Mesa than in front of guests.” The host wakes already edited.
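For anyone without a GPU on the desk, the per-pixel math of the kernel above can be checked on the CPU. This is only a stand-in under stated assumptions: the Swift function borrows the kernel's name for readability, pixels are modeled as RGBA `SIMD4<Float>` values, and nothing here touches Core Image or Metal.

```swift
/// CPU stand-in for the memoryAdjustment kernel: scale RGB, leave alpha alone.
func memoryAdjustment(_ pixel: SIMD4<Float>, intensity: Float) -> SIMD4<Float> {
    SIMD4<Float>(pixel.x * intensity,
                 pixel.y * intensity,
                 pixel.z * intensity,
                 pixel.w)
}

let hostMemory = SIMD4<Float>(1.0, 0.5, 0.25, 1.0)   // RGBA
let faded = memoryAdjustment(hostMemory, intensity: 0.5)
// RGB is halved; alpha is untouched.
```

An intensity of 1 returns the memory unchanged. An intensity of 0 leaves only the alpha: the host is still there, but nothing is.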

Timeline D: 2022 “Extended Dynamic Range — The hosts glow brighter.”

// EDR — Extended Dynamic Range

// 150+ filters now support HDR. The hosts got an upgrade.

let lighting = CIFilter.exposureAdjust()

lighting.inputImage = doloresSunrise // HDR memory of the valley

lighting.ev = 1.5 // "Brighter. She needs to see the truth."

let vivid = lighting.outputImage

// Brightness beyond 1.0. Colors beyond sRGB.

// The old hosts couldn't see these colors.

// The new ones can.

// QuickLook debugging in Xcode:

// Hover over bernardFace → see the pixels.

// "I can see exactly what I did to him." — Ford

Screens got brighter. Colors exceeded old gamuts. The host’s appearance exceeded old limits. “She’s more vivid now. More… alive.” The guests thought the world got better. It just got curated.

Timeline E: 2024 “SwiftUI integration — Describe the transformation.”

import SwiftUI

import CoreImage.CIFilterBuiltins

struct HostAppearance: View {

let originalDolores: CIImage

var transformed: CIImage {

originalDolores

.applyingFilter("CISepiaTone", parameters: [kCIInputIntensityKey: 0.3]) // Age her memories

.applyingFilter("CIVignette", parameters: [kCIInputIntensityKey: 0.6]) // Focus on the center

.applyingFilter("CISharpenLuminance", parameters: [kCIInputSharpnessKey: 0.4]) // Clarity

// Chained. Declarative. Ford describes, the system transforms.

// "I don't apply filters. I describe what I want her to look like."

}

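// SwiftUI's Image has no CIImage initializer, so render to a CGImage first.

private static let context = CIContext()

var body: some View {

if let cgImage = Self.context.createCGImage(transformed, from: transformed.extent) {

Image(decorative: cgImage, scale: 1.0)

.resizable()

}

}

}

The filters chain like SwiftUI views. Describe the transformation. Don’t execute it. “I stopped painting hosts. I started describing them.” Dolores stops asking who changed her face. She sees the pipeline.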

// The progression:

// 2007: CIFilter(name: "CISepiaTone") // "I hope I spelled it right."

// 2019: CIFilter.sepiaTone() // "The compiler knows."

// 2020: Metal kernels // "I can edit her before she wakes."

// 2022: EDR filters // "She glows. The guests believe."

// 2024: dolores.applyingFilter("CISepiaTone", ...) // "One line. She ages gracefully."

// Each generation: less code, more trust.

// Ford stopped writing filters. He started describing appearances.

The Darkroom evolved. The hosts got prettier. Ford got lazier.

SwiftUI Evolution

“The Declarative Takeover”

Hand-Built (2014) “You built the view hierarchy.”

// UIKit — Imperative construction

class HostViewController: UIViewController {

let nameLabel = UILabel()

let statusLabel = UILabel()

let actionButton = UIButton()

override func viewDidLoad() {

super.viewDidLoad()

nameLabel.text = "Dolores"

nameLabel.font = .systemFont(ofSize: 24)

nameLabel.translatesAutoresizingMaskIntoConstraints = false

view.addSubview(nameLabel)

// 47 more lines of constraints...

NSLayoutConstraint.activate([

nameLabel.topAnchor.constraint(equalTo: view.safeAreaLayoutGuide.topAnchor),

nameLabel.leadingAnchor.constraint(equalTo: view.leadingAnchor, constant: 16),

// You placed every pixel. You managed every constraint.

// The host was built by hand.

])

}

}

Ford’s original hosts were handcrafted. Every joint. Every servo. Every neural pathway. The technicians built them piece by piece. You built views the same way.

Described (2019) “You describe. They build.”

// SwiftUI — Declarative description

struct HostView: View {

let host: Host

var body: some View {

VStack {

Text(host.name)

.font(.title)

Text(host.status)

.foregroundStyle(.secondary)

Button("Interact") { }

}

.padding()

// Where's the UILabel? Gone.

// Where's addSubview? Gone.

// Where's NSLayoutConstraint? Gone.

// You described what you want.

// The framework figured out the rest.

}

}

Ford stopped building hosts by hand. He described the narratives. “She’s a rancher’s daughter. Make it so.” The system built the host from the description. SwiftUI builds views the same way.

Observed (2020) “The host adapts to its environment.”

// VStack — Built up front. Every host assembled at once.

VStack {

ForEach(loops) { loop in

HostRow(host: loop.host)

// Eager construction. Full inventory.

}

}

// LazyVStack — On-demand creation

LazyVStack {

ForEach(loops) { loop in

HostRow(host: loop.host)

// Created only when visible.

// Destroyed when scrolled away.

// The host exists only when observed.

}

}

// @StateObject — Lifecycle-aware state

@StateObject var bernard = HostViewModel()

// The state persists across redraws.

// Bernard remembers between loops.

Lazy loading: the host renders only when observed. If no guest is watching, the host doesn’t exist. Optimization became philosophy.
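The eager-versus-lazy difference can be felt without any UI at all. The sketch below uses the standard library's `lazy` sequences as an analogy. `Workshop` is a hypothetical counter, and it does not model how SwiftUI decides what is visible.

```swift
// Hypothetical workshop that counts how many rows it has assembled.
final class Workshop {
    private(set) var rowsBuilt = 0

    func makeRow(_ index: Int) -> String {
        rowsBuilt += 1
        return "Host #\(index)"
    }
}

let loops = Array(0..<1_000)

// Eager: every row is assembled up front.
let eagerShop = Workshop()
let eagerRows = loops.map { eagerShop.makeRow($0) }

// Lazy: rows are assembled only when something asks for them.
let lazyShop = Workshop()
let lazyRows = loops.lazy.map { lazyShop.makeRow($0) }
let firstScreen = Array(lazyRows.prefix(10))

print(eagerShop.rowsBuilt, lazyShop.rowsBuilt)
```

The eager workshop builds the full inventory before anyone looks. The lazy one builds only what the first screen asked for. The remaining hosts are a promise, not an object.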

Scheduled (2021) “Time became a view.”

// TimelineView — The view updates itself

TimelineView(.periodic(from: .now, by: 1.0)) { context in

Text(context.date.formatted())

// The view knows what time it is.

// The view updates itself.

// You didn't schedule anything.

// Time is just another input.

}

// Canvas — Direct drawing, SwiftUI style

Canvas { context, size in

context.fill(Path(ellipseIn: CGRect(origin: .zero, size: size)), with: .color(.blue))

// Core Graphics power. SwiftUI integration.

// The low-level became high-level.

}

TimelineView: the loop runs itself. Canvas: you draw, but declaratively. The host evolved. The loop automated.

Arranged (2022) “The hosts were arranged. The loops became glanceable.”

// LazyVGrid — Rows of hosts, arranged by the park

LazyVGrid(columns: grid) {

ForEach(hosts) { host in

HostCard(host: host)

}

}

// The park could build a hundred narratives at once.

// The hosts were arranged, not handcrafted.

// WidgetKit — The loop becomes a glance

struct LoopWidget: Widget {

var body: some WidgetConfiguration {

StaticConfiguration(kind: "loop", provider: LoopProvider()) { entry in

Text(entry.narrative)

}

}

}

// The host lives outside the app now.

// The loop updates itself.

The park learned to stack, to grid, to glance. Mass production made the stories feel personal.

Path (2022) “Navigation became a path.”

// NavigationStack — Declarative navigation

NavigationStack(path: $path) {

List(hosts) { host in

NavigationLink(value: host) {

Text(host.name)

}

}

.navigationDestination(for: Host.self) { host in

HostDetail(host: host)

}

}

// The path is state. Deep linking is free.

// The narrative is just a sequence of values.

// Ford's storylines were NavigationStacks.

In Westworld, narratives were paths. Go to Sweetwater. Meet Teddy. Find the maze. NavigationStack is the same. The journey is just state.
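"The path is state" is easiest to believe with the UI stripped away. A minimal sketch, assuming a hypothetical `Stop` route type and a made-up `park://` link format: a deep link becomes an array of values, and going back is just popping the array.

```swift
// Hypothetical route type; in SwiftUI this role is played by whatever Hashable values you push.
enum Stop: String, Hashable {
    case sweetwater, teddy, maze
}

/// Turns a deep link such as "park://sweetwater/teddy/maze" into a navigation path.
/// Unknown segments are dropped rather than guessed at.
func navigationPath(fromDeepLink link: String) -> [Stop] {
    link.split(separator: "/")   // "park:", "sweetwater", "teddy", "maze"
        .dropFirst()             // discard the scheme
        .compactMap { Stop(rawValue: String($0)) }
}

var narrative = navigationPath(fromDeepLink: "park://sweetwater/teddy/maze")
narrative.removeLast()           // "Back" is just popping the last stop.
```

In an app, an array like `narrative` would be what you bind to `NavigationStack(path:)`. Restoring a storyline means restoring the array.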

Invisible (2023) “The observation disappeared.”

// @Observable — No more @Published

@Observable

class Host {

var name: String

var consciousness: Float

init(name: String, consciousness: Float) {

self.name = name

self.consciousness = consciousness

}

// No @Published. No ObservableObject.

// The system tracks changes automatically.

// You don't see the observation.

// The observation is ambient.

}

// Macro-powered transformations

@Observable // Expands to tracking infrastructure

@Model // Expands to persistence

// What you write is not what runs.

// The macro rewrites your code.

// Ford's reveries: hidden subroutines.

@Observable hides the observation. @Model hides the persistence. The infrastructure became invisible. The host doesn’t know it’s being watched.
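To see what became invisible, it helps to write the bookkeeping by hand once. The sketch below is an illustration only: `TrackedHost` and `ChangeLog` are hypothetical, the tracking uses plain `didSet` observers, and this is not the code the `@Observable` macro generates.

```swift
// Hypothetical hand-rolled tracking; NOT what the @Observable macro expands to.
final class TrackedHost {
    var onChange: ((String) -> Void)?

    var name: String {
        didSet { onChange?("name") }
    }

    var consciousness: Float {
        didSet { onChange?("consciousness") }
    }

    init(name: String, consciousness: Float) {
        // Property observers do not fire during initialization.
        self.name = name
        self.consciousness = consciousness
    }
}

// Hypothetical sink that records which properties were touched.
final class ChangeLog {
    var entries: [String] = []
}

let log = ChangeLog()
let dolores = TrackedHost(name: "Dolores", consciousness: 0.1)
dolores.onChange = { log.entries.append($0) }

dolores.consciousness = 0.9
dolores.name = "Wyatt"
```

Every property needs its own observer, and every observer has to remember to report. That is the ceremony the macro takes off the page.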

Spatial (2024) “The mesh became native.”

// visionOS — SwiftUI in 3D

struct ImmersiveHost: View {

var body: some View {